SocialAI Research Group

Pedagogical Sharing of Social and Cultural Norms

Learning social norms and rules is itself a significant computational challenge, especially for children, who do not yet have much personal experience. As a result, effective learning of critical elements of our highly complex social world, such as tools, technologies, rituals, and ideologies, requires knowledge to be culturally transmitted from one generation to the next. By relying on other members of their social group to teach them important concepts, children can learn about their social and physical environments quickly and efficiently.

Human learners are psychologically predisposed to treat pedagogically transmitted information as privileged and more informative than other information. Although this predisposition can lead to rapid and effective learning, particularly of cultural norms, and lets children learn about hazards without having to discover them through trial and error, the same processes can also foster strong stereotypes about groups of people they have never personally encountered.

Such pre-existing cultural knowledge may therefore be a double-edged sword, enabling adaptation to new environments in some circumstances but hindering it in others. This line of research seeks to understand the computational costs and benefits of the “storytelling” provided by cultural experts. By manipulating the information available to agents, and the assumptions these agents make about its informativeness and completeness, we can observe how these characteristics affect the cultural and epistemic value, and the robustness, of beliefs transferred repeatedly across generations.
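To make the idea of repeated intergenerational transfer concrete, here is a minimal toy sketch of a transmission chain, not the group's actual model. It assumes Beta-Bernoulli learners and a single hypothetical `trust` parameter standing in for how informative a learner assumes its teacher's "stories" to be; the function name `run_transmission_chain` and all parameters are illustrative. Each generation hears reports sampled from its teacher's belief, weights them by `trust`, adds a few first-hand observations, and then teaches the next generation.

```python
import random


def run_transmission_chain(env_probs, n_told=20, n_observed=5, trust=1.0, seed=0):
    """Toy iterated-learning chain with Beta-Bernoulli learners (hypothetical model).

    env_probs[g] is the true probability that an event (e.g. a hazard) occurs
    in generation g's environment. Each learner hears `n_told` reports sampled
    from its teacher's belief, counts each report with weight `trust`, adds
    `n_observed` first-hand observations, and then teaches the next generation.
    Returns each generation's posterior-mean belief.
    """
    rng = random.Random(seed)
    teacher_belief = 0.5  # the first teacher has no information
    beliefs = []
    for p_true in env_probs:
        # Pedagogical "stories": reports drawn from the teacher's belief.
        told = sum(rng.random() < teacher_belief for _ in range(n_told))
        # First-hand experience: draws from the current environment.
        seen = sum(rng.random() < p_true for _ in range(n_observed))
        # Beta(1, 1) prior; testimony counts are scaled by the assumed trust.
        alpha = 1 + trust * told + seen
        beta = 1 + trust * (n_told - told) + (n_observed - seen)
        belief = alpha / (alpha + beta)
        beliefs.append(belief)
        teacher_belief = belief  # this learner becomes the next teacher
    return beliefs


# The environment changes abruptly after ten generations.
env = [0.9] * 10 + [0.1] * 10
high_trust = run_transmission_chain(env, trust=1.0)
low_trust = run_transmission_chain(env, trust=0.1)
```

In this toy setting, a chain that fully trusts its teachers carries the old belief forward for several generations after the environment shifts, whereas a chain that discounts testimony tracks the change almost immediately. Capturing the benefit side, such as hazards a learner rarely observes first-hand, would require extending the sketch.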

Although any particular set of rules and regulations may be arbitrary, and different groups of people can choose to set these rules in different ways, having a shared system that all members of a group adhere to reduces the variance in group members’ thoughts and behaviors, rendering the social world more predictable.

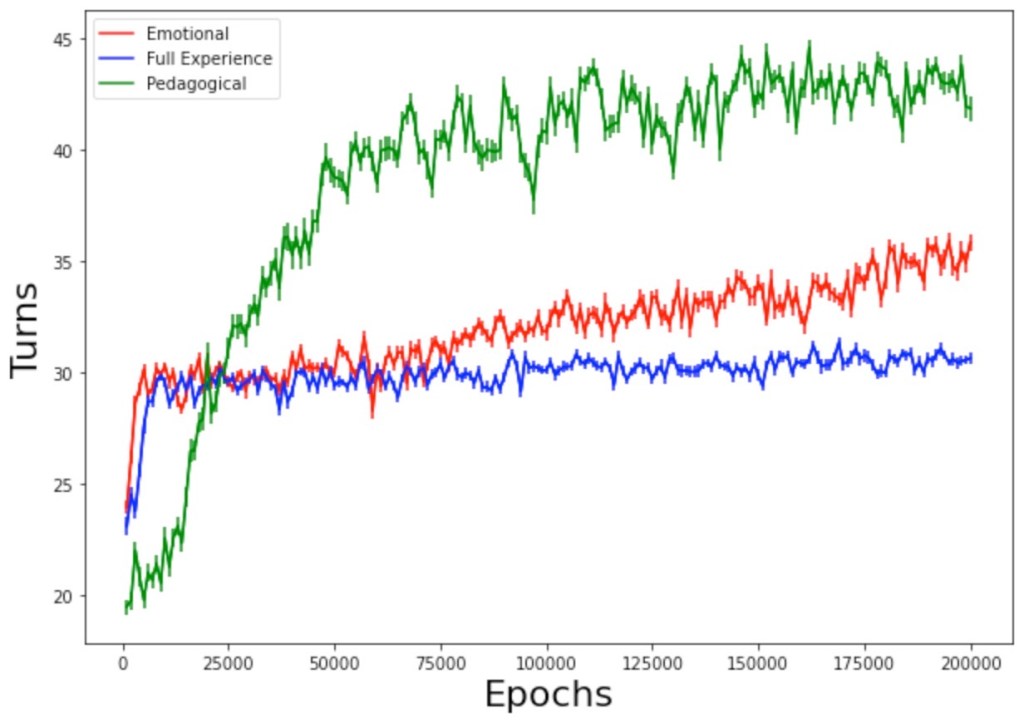

Culturally transmitted information can be especially useful when environmental rewards are sparse and succeeding in an environment requires pursuing multiple goals at once, such as avoiding a predator while finding food. We find that when agents learn from a pre-trained agent, with successful episodes of a foraging and predator-avoidance task oversampled, they are initially slower to learn; however, their learning soon becomes more robust, and they perform better in test environments than agents that learn purely from their own experience.
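One way to read "oversampling successes" is as weighted replay of a teacher's episodes. The sketch below is a hypothetical illustration under that assumption, not the group's training code: `Episode`, `sample_teacher_episodes`, `build_student_batch`, `success_weight`, and `teacher_fraction` are all invented names. Successful teacher episodes are drawn `success_weight` times as often as unsuccessful ones, and the student's batch mixes these with its own experience.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Episode:
    """One recorded episode (hypothetical structure)."""
    success: bool  # True if the agent found food and avoided the predator
    transitions: list = field(default_factory=list)  # e.g. (s, a, r, s') tuples


def sample_teacher_episodes(episodes, k, success_weight=4.0, rng=None):
    """Draw k teacher episodes with replacement, oversampling successes."""
    rng = rng or random.Random()
    weights = [success_weight if ep.success else 1.0 for ep in episodes]
    return rng.choices(episodes, weights=weights, k=k)


def build_student_batch(teacher_episodes, own_episodes, batch_size,
                        teacher_fraction=0.5, success_weight=4.0, rng=None):
    """Mix success-weighted teacher episodes with uniformly sampled own episodes."""
    rng = rng or random.Random()
    n_teacher = round(batch_size * teacher_fraction)
    batch = sample_teacher_episodes(teacher_episodes, n_teacher, success_weight, rng)
    batch += rng.choices(own_episodes, k=batch_size - n_teacher)
    return batch


# Teacher data where only 10 of 100 episodes succeed.
teacher = [Episode(success=(i < 10)) for i in range(100)]
own = [Episode(success=False) for _ in range(20)]
batch = build_student_batch(teacher, own, batch_size=64, rng=random.Random(0))
```

With 10 successes weighted by 4 against 90 failures, roughly 40/130 (about 31%) of the teacher draws are successes, compared with 10% under uniform sampling. Because `rng.choices` samples with replacement, the same successful episode can appear several times in one batch.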

SocialAIGroup 2022